Screwed Body Reverberations

Description

Screwed Body Reverberations is a series of auditory experiments by me, Candide Uyanze, that invite users to generate their own “slow and reverbed” version of an audio track using their bodies. For each experiment, users can perform an action to control either the audio rate, reverb, panning, or all three.

Screwed Body Reverberations builds on several p5.js sketches, most notably “PoseNet Example 1” by The Coding Train / Daniel Shiffman. PoseNet is a machine learning model for real-time pose estimation from camera video.

I’ve also consulted and repurposed the following code references and examples from the p5.js website:

- rate() reference page

- Playback Rate example

- Reverb example

- Reverb reference page

- Pan Sound example

- constrain() reference page

My project uses the following audio samples:

- Study 1: “Chime Sound” by user jgreer via Freesound

- Studies 2 & 3: “poker face” by city rain via Free Music Archive

- Study 4: “bucket of fun” by Fidget via Free Music Archive

- Study 5: “luna rd” by kilowatts via Free Music Archive

Users are invited to repurpose, copy, and experiment with each study to remix a song of their choice.

Please note that sketches opened in Chrome seem to have trouble loading sound. I recommend using Firefox or Microsoft Edge.

Context

Screwed Body Reverberations, as its name hints, references the trend of “slowed + reverb” remixes on YouTube. In these remixes, a song is slowed down and reverb is added. The process adds a sense of “melancholy” and “digital echo,” further accented by pairing the audio with “wistful animations” from vintage Japanese anime (Cush 2020). Journalists Justin Charity and Micah Peters have dubbed this audiovisual combination “millennial aesthetics” (2020).

Here is an example of a “slowed + reverb” remix of Solange Knowles’s hit “Don’t Touch My Hair”:

(for reference, the original song can be heard here)

The rise of the internet subculture surrounding this trend is often credited to Jarylun Moore, known as “Slater,” who rose to prominence after uploading a “slowed + reverb” remix of a Lil Uzi Vert song in 2017 that garnered millions of views (Cush 2020). The concept of slowing down songs and adding reverb, however, isn’t new.

The trend has been panned by many as a whitewashed, gentrified version of the ’80s-era “chopped and screwed” remixes pioneered by the late Houston, TX hip hop DJ Robert Earl Davis Jr., known as DJ Screw (Pearce 2020; Charity and Peters 2020; Jefferson 2020). DJ Screw’s sound consisted of bass-heavy, “slowed down beats and repeated phrases” (Hope 2006; Corcoran 2017, 43). The “chopped” in “chopped and screwed” refers to the turntable technique of rearranging and repeating lyrics, credited to Darryl Scott, Houston’s ’80s “mixtape king” (Corcoran 2017, 43).

In contrast, “slowed + reverb” has been criticized as “far less technical”: it skips the chopping technique and takes only a few minutes to recreate with free audio software or websites, yet it evokes a similar style and mood to its predecessor (Pearce 2020; Cush 2020).

Pitchfork noted that chopped and screwed music is “a clear forebear of the slowed + reverb sound” (Cush 2020). Slater, who also hails from Houston, named DJ Screw as one of his influences in an interview with Pitchfork (Cush 2020). Although his channel makes no reference to DJ Screw or Houston, Slater is glad to be able to “bring it to a wider audience” (Cush 2020).

The slowed + reverb vs chopped and screwed controversy reached its apex when TikTok user Dev Lemons (@songpsych) posted an explainer video about the trend, crediting Slater as one of the originators while failing to mention DJ Screw (Pearce 2020). The ensuing backlash prompted Slater to reaffirm the late DJ’s influence, and led Dev to be invited as a guest on Donnie Houston’s podcast for a “lesson on DJ screw and Houston Hip Hop culture” (Houston 2020; Pearce 2020).

My aim with Screwed Body Reverberations is to reimagine this remixing practice and let participants actively manipulate the audio sample to their liking. Participants can choose whether to mimic the so-called “slowed + reverb” trend, or subvert it by increasing the pitch and speed.
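Because the studies change the playback rate of the whole sample (with p5.js’s rate()), pitch and speed always move together. As a rough illustration, here is a minimal sketch using hypothetical helper names (not code from the studies): the pitch shift in semitones is 12 × log2(rate), and the track’s length becomes the original length divided by the rate.

```javascript
// Hypothetical helpers (not from the original sketches) describing what a plain
// playback-rate change does to a track, where speed and pitch are coupled.

// Pitch shift in semitones for a given playback rate (1 = original speed).
function semitoneShift(rate) {
  return 12 * Math.log2(rate);
}

// New track length in seconds after changing the playback rate.
function stretchedDuration(originalSeconds, rate) {
  return originalSeconds / rate;
}

// A typical "slowed" setting versus a sped-up, "subverted" one.
for (const rate of [0.8, 1.25]) {
  console.log(
    `rate ${rate}: ${semitoneShift(rate).toFixed(2)} semitones, ` +
      `${stretchedDuration(180, rate).toFixed(1)} s for a 3-minute track`
  );
}
```

At a rate of 0.8, a three-minute track drops by about 3.86 semitones and stretches to 225 seconds; at 1.25 it rises by the same amount and shrinks to 144 seconds.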

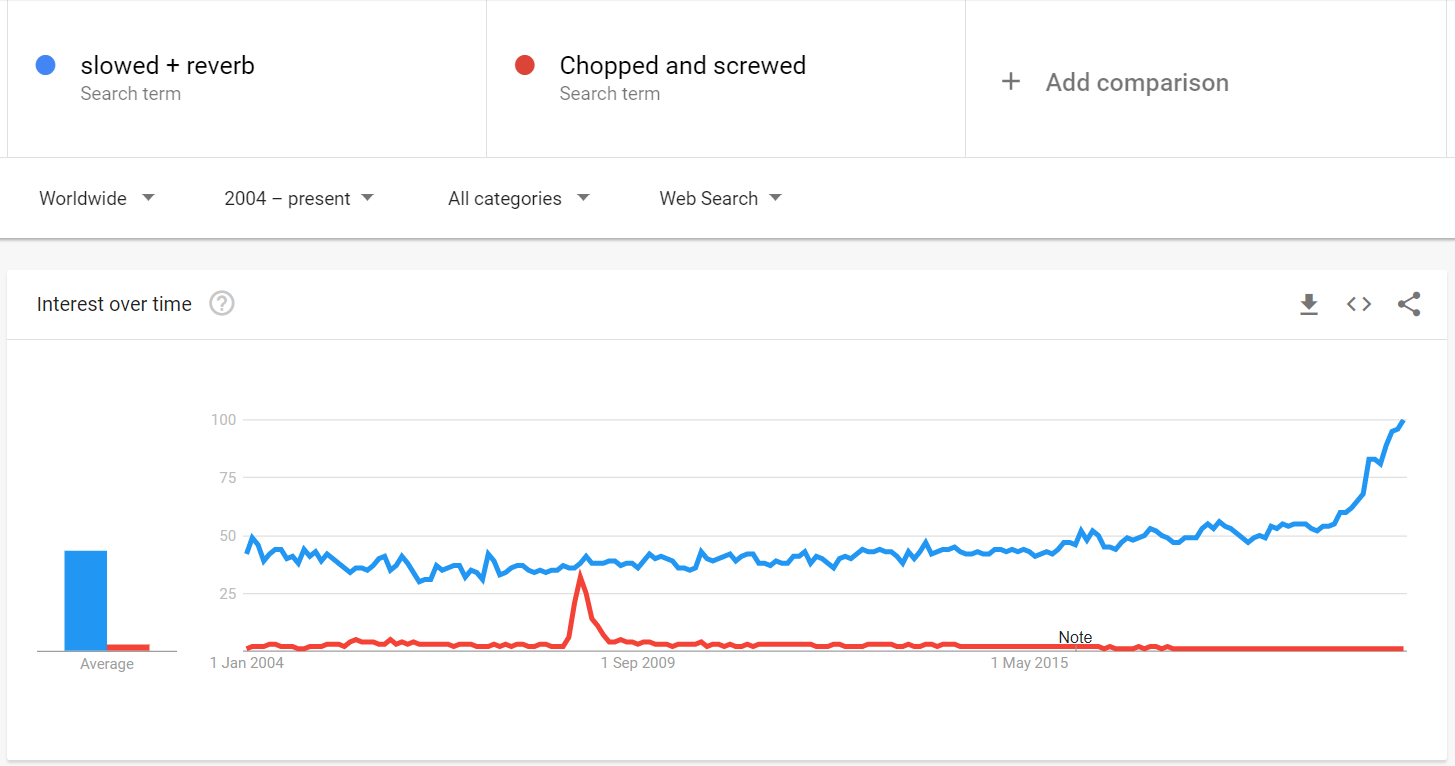

The debate surrounding slowed + reverb brought to mind the artwork I discussed in my Related Works assignment, LEVEL UP: The Real Harlem Shake (Asega, Rosa-Salas, and Cozie 2014). In the year the artwork was exhibited, the viral Harlem Shake dance trend had overshadowed videos of the original Harlem Shake. Similarly, my query in the Google Trends database revealed that search interest in “slowed + reverb” has overtaken search interest in “chopped and screwed.”

Though my goal is not to teach participants how to correctly recreate DJ Screw’s signature style, as LEVEL UP does for the Harlem Shake, I was inspired by how the installation invites participants to enter a performative space “as an engaged equal as opposed to a passive observer and consumer” (Gomes 2014), much as Donnie Houston did when he invited Dev Lemons onto his podcast.

Study 1 – Reverb controlled by distance

Brief description, observations, and reflections

My first study looked at how I could control the reverb using my proximity to the camera. While playing around with Daniel Shiffman/The Coding Train’s PoseNet estimation code, I noticed a red circle that grew and shrank as I moved toward and away from the camera. Its size was based on the distance between the two eyes, which I figured was my best bet for measuring how far I was from the camera. Thus, I combined this segment of Daniel’s code with p5.js’s reverb example and reverb reference code.
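The idea of using eye spacing as a distance proxy can be sketched as a pure function. This is a minimal sketch under stated assumptions, not the study’s code: `mapRange` and `clamp` are stand-ins for p5.js’s map() and constrain() so it runs outside the browser, the 20 px and 120 px calibration values are hypothetical, and the direction (closer means less reverb) follows the finale’s instructions. In a p5.js sketch, the result would be passed to the reverb’s drywet() method.

```javascript
// Stand-ins for p5.js's map() and constrain(), so this runs outside the browser.
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) * (outMax - outMin)) / (inMax - inMin);
}
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Euclidean distance (pixels) between the two eye keypoints.
function eyeDistance(leftEye, rightEye) {
  return Math.hypot(leftEye.x - rightEye.x, leftEye.y - rightEye.y);
}

// Hypothetical calibration: about 20 px between the eyes when standing far back,
// about 120 px when close to the camera.
const FAR_EYE_DIST = 20;
const NEAR_EYE_DIST = 120;

// Far away -> fully wet (1), up close -> fully dry (0), clamped to [0, 1].
function reverbDryWet(eyeDist) {
  return clamp(mapRange(eyeDist, FAR_EYE_DIST, NEAR_EYE_DIST, 1, 0), 0, 1);
}
```

Clamping matters here because PoseNet can report eye spacings outside the calibrated range, and a dry/wet value outside 0 to 1 has no meaning.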

Try it yourself!

Grab some headphones and enable your webcam. Experiment with moving closer to and farther from the camera to control the amount of reverb heard:

https://editor.p5js.org/candideu/present/mBfQ7mK1B

Video of the interaction

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/mBfQ7mK1B

Study 2 – Audio rate controlled by wrist

Brief description, observations, and reflections

My next study explored how to control the audio rate (which changes both the speed and the pitch of the audio) using the right wrist’s Y position. I made use of the code Professor Kate shared with the class for the PoseNet lecture, and combined it with p5.js’s reference code for changing the rate based on mouse position and its example code for changing the rate and volume based on mouse position.
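The wrist-to-rate mapping can be sketched the same way the p5.js examples map mouse position, just with a keypoint’s y instead. A minimal sketch, assuming hypothetical bounds of 0.5 to 1.5 so that the vertical middle of the frame plays at the original speed; `mapRange` and `clamp` stand in for p5.js’s map() and constrain(). In the sketch, the value would go to the sound file’s rate() each frame.

```javascript
// Stand-ins for p5.js's map() and constrain().
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) * (outMax - outMin)) / (inMax - inMin);
}
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Hypothetical bounds: half speed at the top of the frame, 1.5x at the bottom,
// so the vertical midpoint plays at the original speed.
const MIN_RATE = 0.5;
const MAX_RATE = 1.5;

// Canvas y grows downward, so a lower wrist (larger y) gives a faster rate.
function rateFromY(y, canvasHeight) {
  return clamp(mapRange(y, 0, canvasHeight, MIN_RATE, MAX_RATE), MIN_RATE, MAX_RATE);
}
```

The same function works unchanged for Study 3 if it is fed the nose’s y instead of the wrist’s.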

I found that using the wrist as a controller in this instance wasn’t ideal. PoseNet had a hard time tracking my wrist (it often tracked my elbow instead), and I had to keep my hand raised to hold the rate at a consistent pace. It’s fun to play around with, but holding your hand up to maintain the same rate can become tiring.
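One common way to soften this kind of tracking glitch (not something the original sketch is described as doing) is to ignore low-confidence keypoints and keep the last good rate. A minimal sketch, assuming each keypoint arrives as `{ x, y, confidence }` with confidence between 0 and 1; ml5’s PoseNet reports a confidence score per keypoint, but check the exact field name for the version you use. The 0.5 threshold and the helper names are hypothetical.

```javascript
// Hypothetical threshold: readings below this confidence are treated as unreliable.
const MIN_CONFIDENCE = 0.5;

// Returns the keypoint's y if it is trustworthy, otherwise null.
function reliableY(keypoint) {
  if (!keypoint || keypoint.confidence < MIN_CONFIDENCE) return null;
  return keypoint.y;
}

// Wraps any y-to-rate mapping so the last good rate is held whenever the
// tracker loses the wrist or reports it with low confidence.
function makeRateTracker(computeRate, initialRate = 1) {
  let lastRate = initialRate;
  return function update(keypoint) {
    const y = reliableY(keypoint);
    if (y !== null) lastRate = computeRate(y);
    return lastRate;
  };
}

// Example: a simple mapping for a 480 px tall canvas.
const trackRate = makeRateTracker((y) => 0.5 + y / 480);
```

This does not fix the tiring hand posture, but it keeps a momentary mis-detection from making the rate jump.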

Try it yourself!

Grab some headphones and enable your webcam. Use your right wrist to increase the rate (lower your hand) or d e c r e a s e the rate (raise your hand):

https://editor.p5js.org/candideu/present/_lKGJfk6B

Video of the interaction

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/_lKGJfk6B

Study 3 – Audio rate controlled by nose location

Brief description, observations, and reflections

Following the iffy results with the wrist, I looked for another body part (keypoint) that could change the rate. After more experimentation, I found that PoseNet tracked the nose more reliably than the wrist. The nose also sits squarely in the middle of the face, so I didn’t have to back away from the camera as much as I did with the previous sketch.

I definitely enjoyed these results a lot more, and this approach let me use full-body movement. 💪🏿

Try it yourself!

Grab some headphones and enable your webcam. Use your body’s height to increase the rate (lower yourself) or d e c r e a s e the rate (raise yourself up):

https://editor.p5js.org/candideu/present/nxkiT_vHg

Video of the interaction

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/nxkiT_vHg

Study 4 – Audio panning controlled by nose location

Brief description, observations, and reflections

One of my wild ideas for this sketch was to map individual wind chimes/bells to different spots on the screen, so that users would hear different sounds as they walked around. I wanted to use the pan function to pan each chime to the left or right depending on its location, so that it would sound like the person was surrounded by chimes. As an avid music listener, I love when sounds and voices are panned to different ears. It almost feels like your brain is being tickled. 😅

Though I opted for the slowed + reverb concept instead, I still wanted to try panning the audio based on the user’s body location.
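Panning by body location follows the same pattern as p5.js’s Pan Sound example, which maps an x position across the canvas to a pan value from -1 (left) to 1 (right). A minimal sketch using the nose’s x; `mapRange` and `clamp` stand in for p5.js’s map() and constrain(), and the optional `flip` flag is a hypothetical addition, since depending on whether the video is mirrored you may need to flip x so the pan follows the performer’s own left and right. In the sketch, the result would go to the sound file’s pan().

```javascript
// Stand-ins for p5.js's map() and constrain().
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) * (outMax - outMin)) / (inMax - inMin);
}
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Maps the nose's x position to a stereo pan value:
// -1 = fully left, 0 = centre, 1 = fully right.
// Set flip = true to mirror x first (hypothetical option, see above).
function panFromX(x, canvasWidth, flip = false) {
  const px = flip ? canvasWidth - x : x;
  return clamp(mapRange(px, 0, canvasWidth, -1, 1), -1, 1);
}
```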

Try it yourself!

Grab some headphones and enable your webcam. Move from one side of the screen to the other to pan the audio from left to right:

https://editor.p5js.org/candideu/present/DA7TyB7zk

Video of the interaction

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/DA7TyB7zk

Study 5 – Audio rate controlled by jumping or crouching

Brief description, observations, and reflections

For my last study, I wanted to find a way to control the rate in a more measured, incremental way. I also knew that I wanted one of my actions to be jumping. Hence Study 5: controlling the audio rate incrementally by jumping. The concept is similar to my previous rate sketches, but it incorporates the idea behind Professor Kate’s sketch, in which she used her wrist to move an ellipse little by little across the screen.

Since the nose is already high up on the body, I decided to track the left shoulder instead. Using the shoulder seemed to help keep a steady pace, since it usually sits in the middle of the screen (whether you’re far away or up close). This way, the rate only changes when the user wants it to.

Though I like the idea of jumping up to slow things down, the main downside of this technique is that jumps often happen so fast that PoseNet doesn’t track the movement quickly enough, and nothing happens to the rate. Conversely, it’s very easy to speed the rate up more than intended. For the finale sketch, I adjusted the size of the top and bottom “sensors” (the indicator bands) to make things easier.
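The band-based control can be sketched as a small per-frame update: the rate only moves while the shoulder is inside a band, and otherwise stays put. A minimal sketch under stated assumptions; the band edges, step size, and rate limits below are hypothetical values for a 480 px tall canvas, not the ones used in the study.

```javascript
// Stand-in for p5.js's constrain().
function clamp(value, low, high) {
  return Math.min(Math.max(value, low), high);
}

// Hypothetical layout for a 480 px tall canvas (illustrative values only).
const TOP_BAND_END = 80;       // shoulder above this y = in the top band
const BOTTOM_BAND_START = 420; // shoulder below this y = in the bottom band
const RATE_STEP = 0.01;        // change per frame while inside a band
const MIN_RATE = 0.25;
const MAX_RATE = 2;

// Nudges the rate a little each frame: down while the shoulder is in the top
// band (jumping), up while it is in the bottom band (crouching), unchanged
// anywhere in between.
function stepRate(currentRate, shoulderY) {
  let next = currentRate;
  if (shoulderY < TOP_BAND_END) next -= RATE_STEP;
  else if (shoulderY > BOTTOM_BAND_START) next += RATE_STEP;
  return clamp(next, MIN_RATE, MAX_RATE);
}
```

Because each frame inside a band changes the rate only slightly, a jump too quick for PoseNet to register leaves the rate where it was, while lingering in the bottom band keeps adding up. Widening the top band and narrowing the bottom one should shift that balance toward slowing down.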

Try it yourself!

Grab some headphones and enable your webcam. Jump up until the white line reaches the dark red area to s l o w the audio rate, or crouch down below the bottom red area to increase the rate:

https://editor.p5js.org/candideu/present/7j7thKGFn

Video of the interaction

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/7j7thKGFn

FINALE – Screwed Body Reverb

As an extra, I combined the nose-panning study, the jump-controlled rate study, and the proximity-controlled reverb study into a more complete experiment. I removed the tracking dot for the shoulder so that it’s just a line (especially since there is already a tracking ellipse for the nose), widened the top band that slows down the rate, and made the bottom band smaller so that it’s harder to speed up the rate by accident.
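The three controls can be combined into one per-frame update. A minimal sketch, not the finale’s actual code: the pose object shape (`leftShoulder`, `nose`, `leftEye`, `rightEye`, each with x and y) and every number in `CONFIG` are hypothetical, chosen only to reflect the wider top band and narrower bottom band described above. In a p5.js draw() loop, the three returned values would feed the sound file’s rate() and pan() and the reverb’s drywet().

```javascript
// Stand-ins for p5.js's map() and constrain().
const mapRange = (v, inMin, inMax, outMin, outMax) =>
  outMin + ((v - inMin) * (outMax - outMin)) / (inMax - inMin);
const clamp = (v, low, high) => Math.min(Math.max(v, low), high);

// Hypothetical settings for a 640 x 480 canvas: a wider top band (y < 120)
// and a narrower bottom band (y > 450).
const CONFIG = {
  width: 640,
  topBandEnd: 120,
  bottomBandStart: 450,
  rateStep: 0.01,
  minRate: 0.25,
  maxRate: 2,
  farEyeDist: 20,   // eye spacing (px) when standing far back
  nearEyeDist: 120, // eye spacing (px) when close to the camera
};

// One frame of the combined controls: shoulder height steps the rate, nose x
// sets the pan, and eye spacing (closer = less reverb) sets the dry/wet mix.
function updateControls(pose, rate, cfg = CONFIG) {
  let nextRate = rate;
  const y = pose.leftShoulder.y;
  if (y < cfg.topBandEnd) nextRate -= cfg.rateStep;
  else if (y > cfg.bottomBandStart) nextRate += cfg.rateStep;
  nextRate = clamp(nextRate, cfg.minRate, cfg.maxRate);

  const pan = clamp(mapRange(pose.nose.x, 0, cfg.width, -1, 1), -1, 1);

  const eyeDist = Math.hypot(
    pose.leftEye.x - pose.rightEye.x,
    pose.leftEye.y - pose.rightEye.y
  );
  const dryWet = clamp(
    mapRange(eyeDist, cfg.farEyeDist, cfg.nearEyeDist, 1, 0),
    0,
    1
  );

  return { rate: nextRate, pan, dryWet };
}
```

Keeping the rate as state passed in and returned (rather than recomputed from position) is what makes the jump control incremental while pan and reverb respond immediately.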

Try it yourself!

As always, grab some headphones and enable your webcam:

https://editor.p5js.org/candideu/present/gUhrx0UFz

Jump into the dark red band at the top to slow down the audio rate, or crouch down to speed it up.

Get closer to the camera to reduce the reverb, or back up to increase it.

Walk to the left and right of the screen to pan the audio.

“Edit” Link for Code

https://editor.p5js.org/candideu/sketches/gUhrx0UFz

Bibliography

Asega, Salome, Ali Rosa-Salas, and Chrybaby Cozie. 2014. LEVEL UP: The Real Harlem Shake. Digital Installation. http://www.salome.zone/levelup/74uuay86kiw74ui2yp69x86xywb83x.

Charity, Justin, and Micah Peters. 2020. ‘“Sound Only”: The State vs. Slowed + Reverb’. Sound Only. Accessed 5 October 2020. https://open.spotify.com/episode/2cTemcDOjkAX9DSHwry2FS?si=yNub9HAeTNe1T8ATWOLzfA.

city rain. 2009. Poker Face. Philly Time! Free Music Archive. https://freemusicarchive.org/music/city_rain.

Corcoran, Michael. 2017. ‘Geto Boys and DJ Screw: Where the Dirty South Began’. In All Over the Map: True Heroes of Texas Music, 2nd edition. Number 11 in the North Texas Lives of Musicians Series. Denton, Texas: University of North Texas Press.

Cush, Andy. 2020. ‘How Slowed + Reverb Remixes Became the Melancholy Heart of Music YouTube’. Pitchfork. 7 April 2020. https://pitchfork.com/thepitch/how-slowed-reverb-remixes-became-the-melancholy-heart-of-music-youtube/.

Fidget. 2009. Bucket of Fun. Philly Time! Free Music Archive. https://freemusicarchive.org/music/Fidget/Philly_Time/bucket_of_fun.

Gomes, Nekoro. 2014. ‘Dancing with a Purpose in New Museum’s AUNTSforcamera Exhibition’. SciArt Initiative (blog). 31 December 2014. http://www.sciartmagazine.com/3/post/2014/12/dancing-with-a-purpose-in-new-museums-auntsforcamera-exhibition.html.

Hope, Clover. 2006. ‘DJ Screw: The Untold Story’. Billboard 118 (29): 47-.

Houston, Donnie. 2020. Dev Lemons Learns About DJ Screw and Houston Hip Hop Culture. https://www.youtube.com/watch?v=JPl9PJp-22U.

Jefferson, J’na. 2020. ‘DJ Screw’s Legacy Is Being Celebrated After TikTok Teens Tried Gentrifying His “Chopped and Screwed” Style’. The Grapevine. 14 August 2020. https://thegrapevine.theroot.com/dj-screws-legacy-is-being-celebrated-after-tiktok-teens-1844726412.

jgreer. 2016. Chime Sound by Jgreer. WAV. Freesound. https://freesound.org/people/jgreer/sounds/333629/.

kilowatts. 2009. Luna Rd. Philly Time! Free Music Archive. https://freemusicarchive.org/music/kilowatts/Philly_Time/luna_rd.

p5.js. n.d.a. ‘constrain() Reference Page’. p5.js. Accessed 5 October 2020. https://p5js.org/reference/#/p5/constrain.

p5.js. n.d.b. ‘p5.Reverb Reference Page’. p5.js. Accessed 5 October 2020. https://p5js.org/reference/#/p5.Reverb.

p5.js. n.d.c. ‘Pan Sound Example’. p5.js. Accessed 5 October 2020. https://p5js.org/examples/sound-pan-sound.html.

p5.js. n.d.d. ‘Playback Rate Example’. p5.js. Accessed 5 October 2020. https://p5js.org/examples/sound-playback-rate.html.

p5.js. n.d.e. ‘rate() Reference Page’. p5.js. Accessed 5 October 2020. https://p5js.org/reference/#/p5.SoundFile/rate.

p5.js. n.d.f. ‘Reverb Example’. p5.js. Accessed 5 October 2020. https://p5js.org/examples/sound-reverb.html.

Pearce, Sheldon. 2020. ‘The Whitewashing of Black Music on TikTok’. The New Yorker, 9 September 2020. https://www.newyorker.com/culture/cultural-comment/the-whitewashing-of-black-music-on-tiktok.

Shiffman, Daniel. 2019. PoseNet Example 1. p5.js. PoseNet. The Coding Train. https://editor.p5js.org/codingtrain/sketches/ULA97pJXR.

Valenzuela, Cristobal, Dan Oved, and Maya Man. n.d. ‘PoseNet’. ml5.js. Accessed 5 October 2020. https://learn.ml5js.org/#/reference/posenet.